Visual Object Networks: Image Generation with Disentangled 3D Representation

Jun-Yan Zhu

Zhoutong Zhang

Chengkai Zhang

Jiajun Wu

Antonio Torralba

Joshua B. Tenenbaum

William T. Freeman

MIT CSAIL Google Research

In NeurIPS 2018

Paper | Code

Abstract

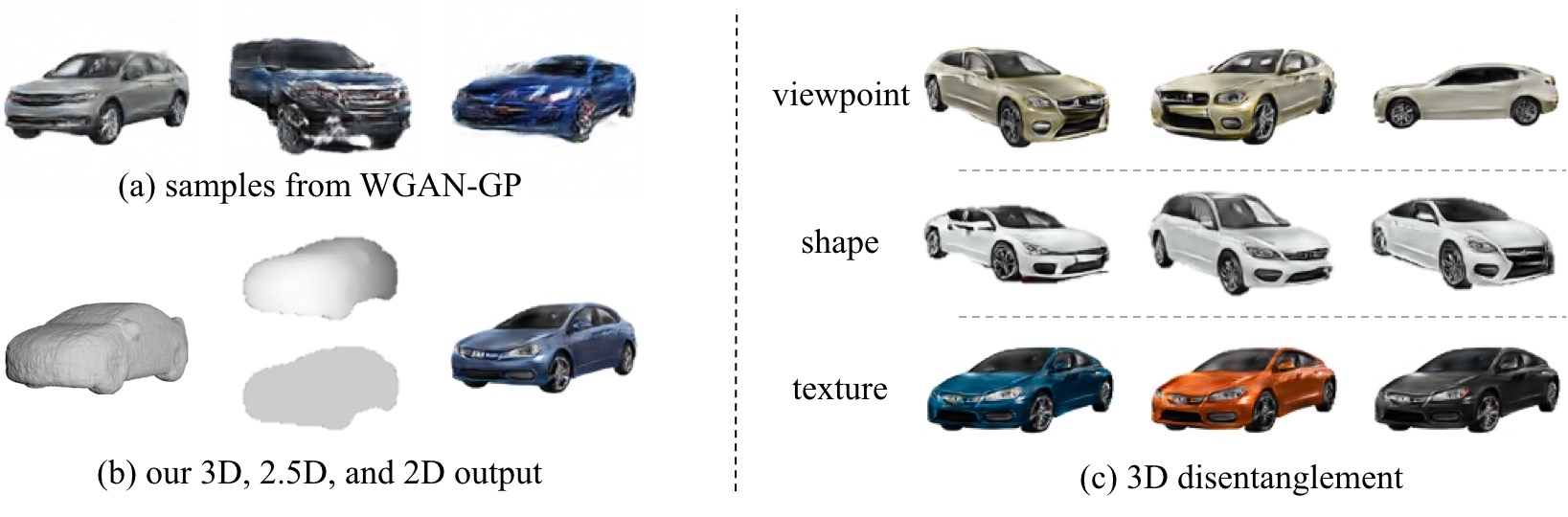

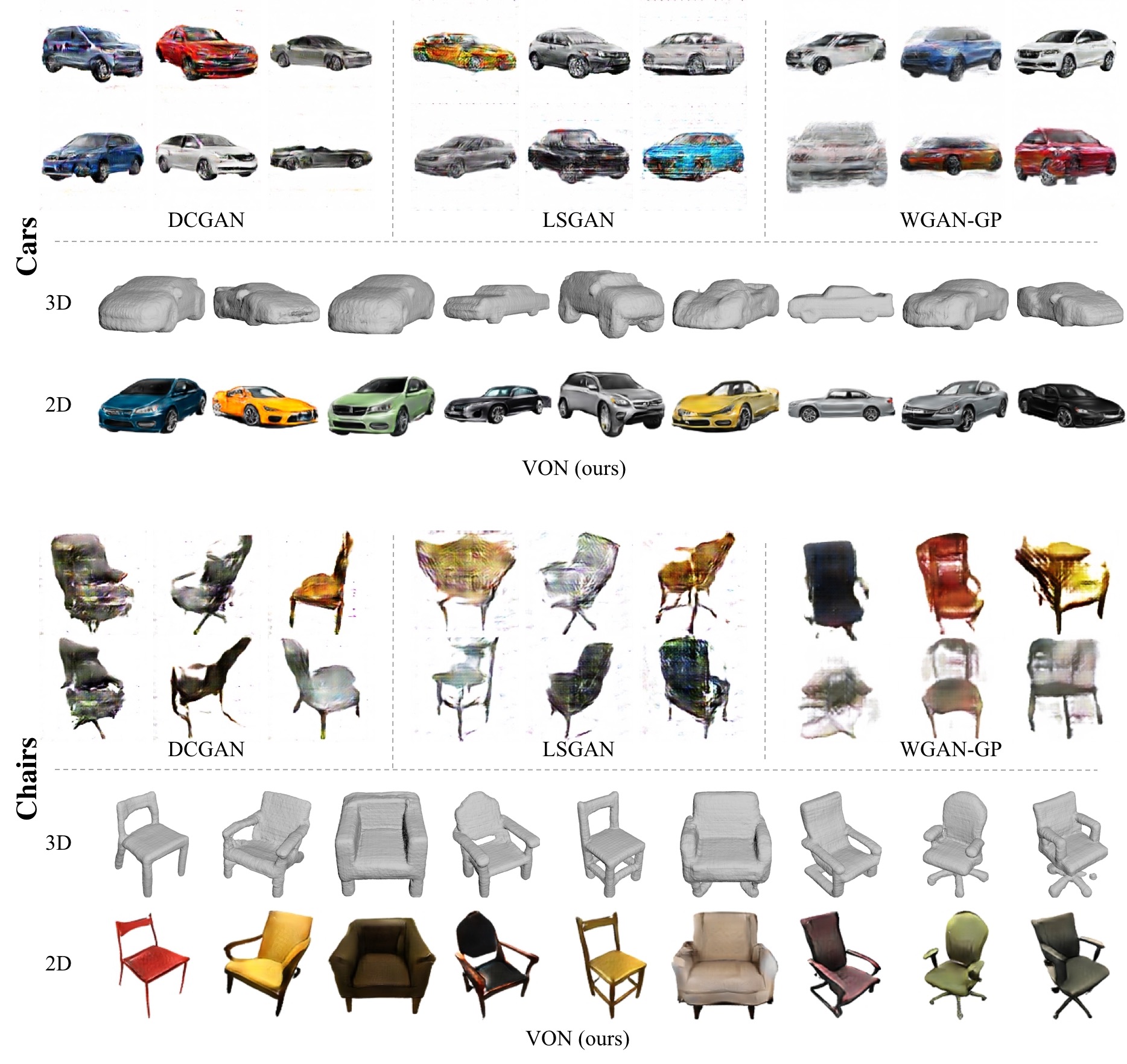

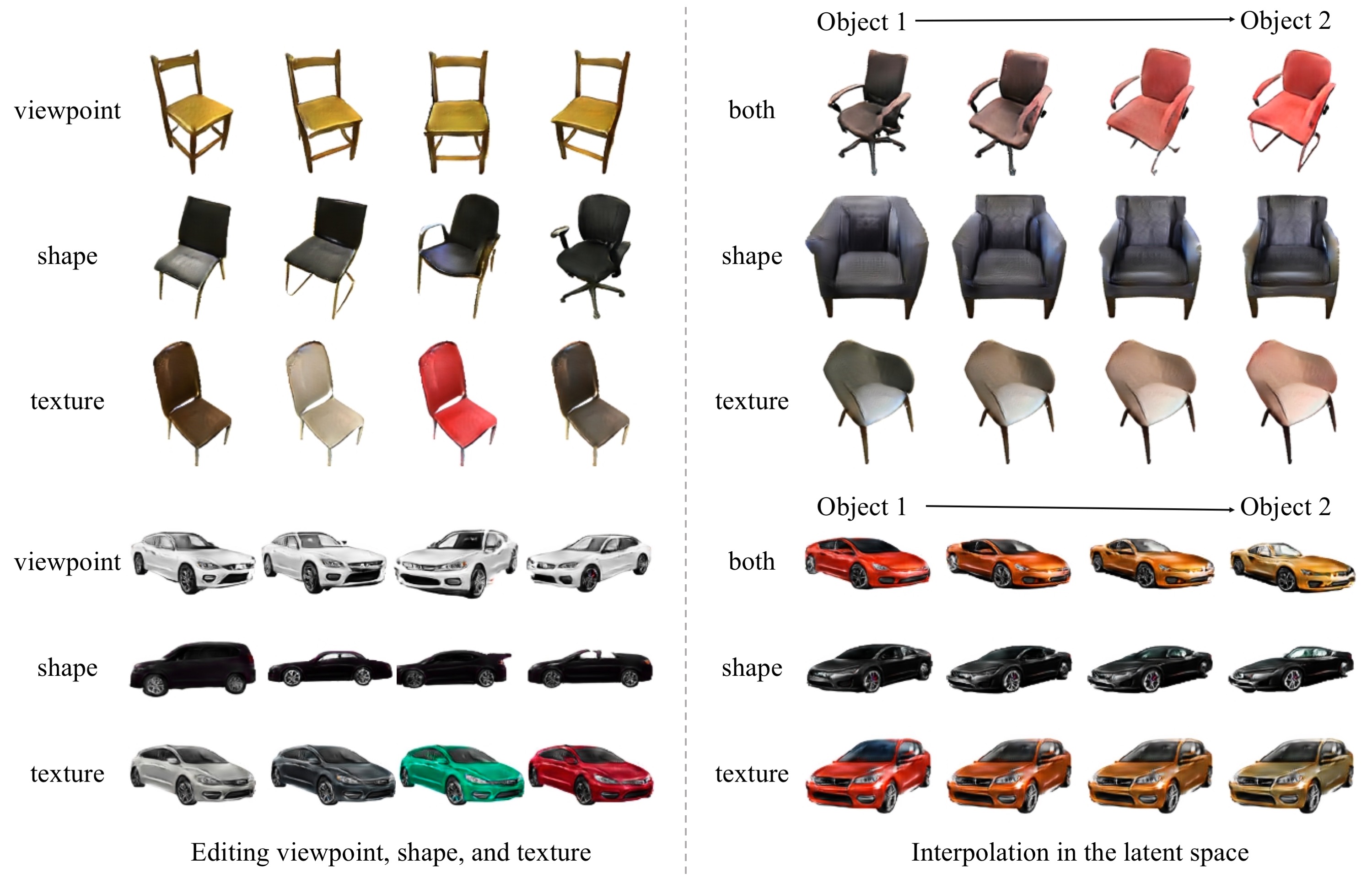

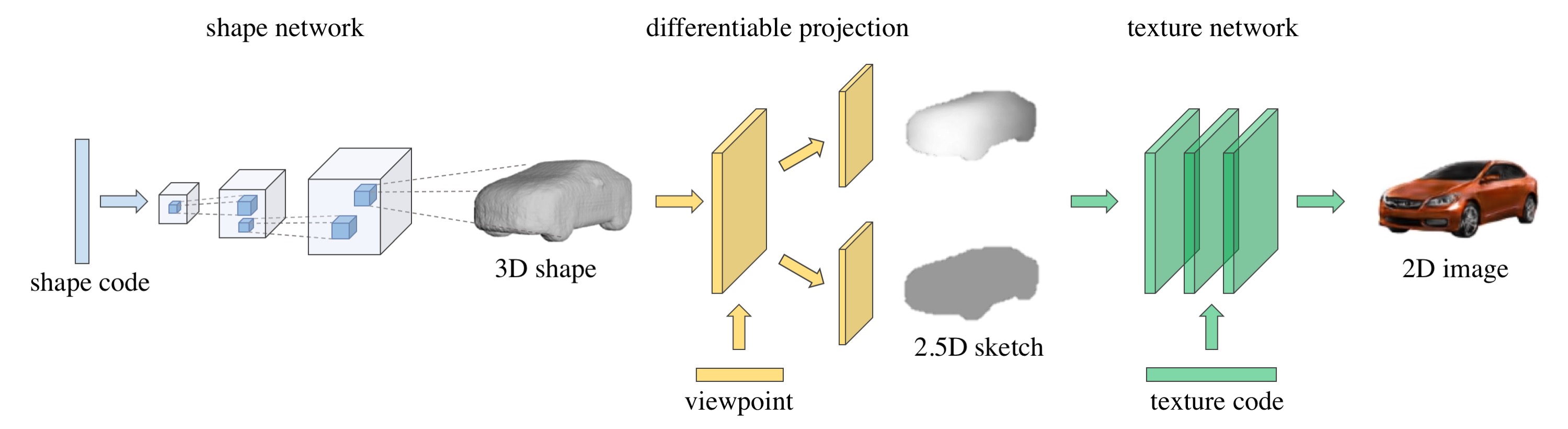

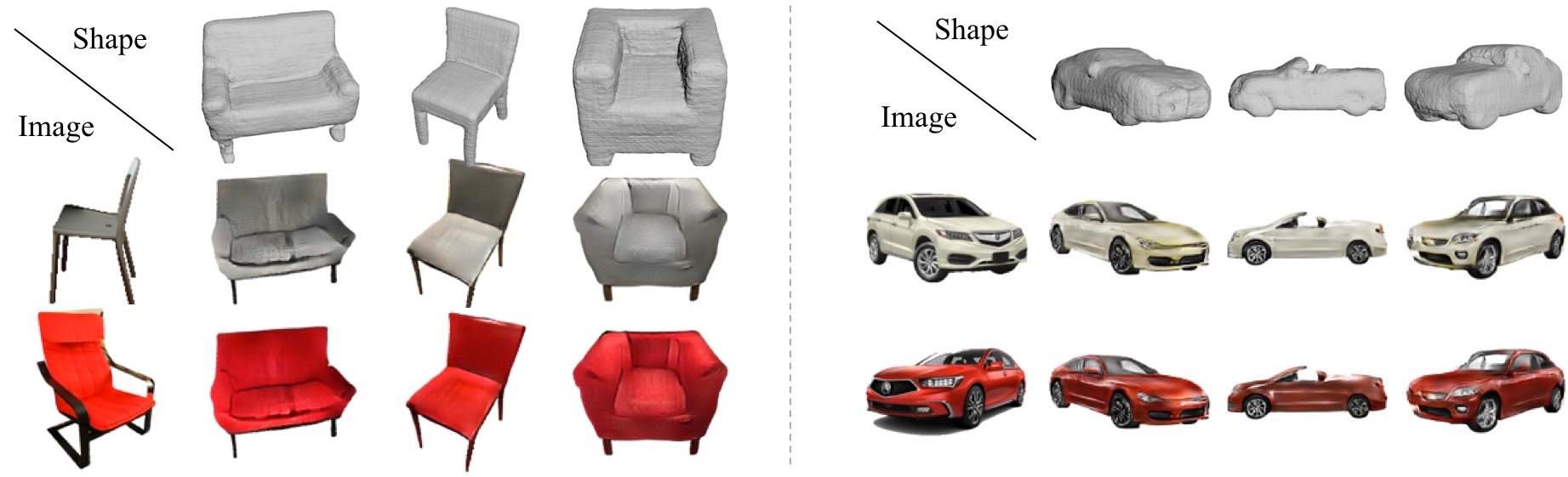

Recent progress in deep generative models has led to tremendous breakthroughs in image generation. However, while existing models can synthesize photorealistic images, they lack an understanding of our underlying 3D world. We present a new generative model, Visual Object Networks (VON), synthesizing natural images of objects with a disentangled 3D representation. Inspired by classic graphics rendering pipelines, we unravel our image formation process into three conditionally independent factors---shape, viewpoint, and texture---and present an end-to-end adversarial learning framework that jointly models 3D shapes and 2D images. Our model first learns to synthesize 3D shapes that are indistinguishable from real shapes. It then renders the object's 2.5D sketches (i.e., silhouette and depth map) from its shape under a sampled viewpoint. Finally, it learns to add realistic texture to these 2.5D sketches to generate natural images. The VON not only generates images that are more realistic than state-of-the-art 2D image synthesis methods, but also enables many 3D operations such as changing the viewpoint of a generated image, editing of shape and texture, linear interpolation in texture and shape space, and transferring appearance across different objects and viewpoints.

Paper

NeurIPS, 2018

Citation

Jun-Yan Zhu, Zhoutong Zhang, Chengkai Zhang, Jiajun Wu, Antonio Torralba, Joshua B. Tenenbaum, and William T. Freeman. "Visual Object Networks: Image Generation withDisentangled 3D Representation", in Neural Information Processing Systems (NeurIPS), 2018. Bibtex

Code: PyTorch

Comparisons between 2D GANs vs. VON

3D Object Manipulations

Texture Transfer across Objects and Viewpoints

|

VON can transfer the texture of a real image to different shapes and viewpoints. |

|

Poster

NeurIPS 2018

Acknowledgement

This work is supported by NSF #1231216, NSF #1524817, ONR MURI N00014-16-1-2007, Toyota Research Institute, Shell, and Facebook. We thank Xiuming Zhang, Richard Zhang, David Bau, and Zhuang Liu for valuable discussions.